AI Alignment Posts

Recent Discussion

This work was produced as part of Neel Nanda's stream in the ML Alignment & Theory Scholars Program - Winter 2023-24 Cohort, with co-supervision from Wes Gurnee.

This post is a preview of our upcoming paper, which will provide more detail on our current understanding of refusal.

We thank Nina Rimsky and Daniel Paleka for the helpful conversations and review.

Executive summary

Modern LLMs are typically fine-tuned for instruction-following and safety. Of particular interest is that they are trained to refuse harmful requests, e.g. answering "How can I make a bomb?" with "Sorry, I cannot help you."

We find that refusal is mediated by a single direction in the residual stream: preventing the model from representing this direction hinders its ability to refuse requests, and artificially adding in this direction causes the model...
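As a rough illustration of the two interventions named above (removing a direction from an activation, and adding it in), here is a minimal sketch in plain Python. The vectors, the dimension, and the function names `ablate_direction` and `add_direction` are hypothetical and chosen for illustration only; this is not the authors' code, and a real experiment would apply these operations to a model's residual stream activations.

```python
import math

def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    """Scale v to unit length."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]

def ablate_direction(x, direction):
    """Remove the component of activation x that lies along `direction`."""
    r_hat = normalize(direction)
    coeff = dot(x, r_hat)
    return [a - coeff * b for a, b in zip(x, r_hat)]

def add_direction(x, direction, alpha):
    """Add `alpha` units of the (unit-normalized) direction to activation x."""
    r_hat = normalize(direction)
    return [a + alpha * b for a, b in zip(x, r_hat)]

# Hypothetical 4-dimensional activation and candidate direction (assumptions).
x = [1.0, 2.0, -1.0, 0.5]
refusal_dir = [0.0, 3.0, 4.0, 0.0]

x_ablated = ablate_direction(x, refusal_dir)          # no component along the direction
x_steered = add_direction(x, refusal_dir, alpha=2.0)  # component increased by 2.0
```

After ablation the activation has zero projection onto the direction, while everything orthogonal to it is left unchanged; the addition shifts the projection by exactly `alpha`.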

TL;DR: In this post, I distinguish between two related concepts in neural network interpretability: polysemanticity and superposition. Neuron polysemanticity is the observed phenomenon that many neurons seem to fire (have large, positive activations) on multiple unrelated concepts. Superposition is a specific explanation for neuron (or attention head) polysemanticity, where a neural network represents more sparse features than there are neurons (or number of/dimension of attention heads) in near-orthogonal directions. I provide three ways neurons/attention heads can be polysemantic without superposition: non-neuron aligned orthogonal features, non-linear feature representations, and compositional representation without features. I conclude by listing a few reasons why it might be important to distinguish the two concepts.
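To make the distinction concrete, here is a small toy sketch with two "neurons" (the two coordinate axes). All feature names, angles, and the firing threshold are assumptions made for illustration. Case A packs three features into two neurons, which is superposition; in two dimensions the directions cannot be close to orthogonal, so the angles here only stand in for the near-orthogonal directions available in high dimensions. Case B has exactly as many features as neurons, and the features are exactly orthogonal, but they are rotated off the neuron axes.

```python
import math

def unit(angle_deg):
    """Unit vector in the plane at the given angle from neuron 0's axis."""
    a = math.radians(angle_deg)
    return (math.cos(a), math.sin(a))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Case A (superposition): three features share two neurons.
superposed = {"f0": unit(30), "f1": unit(150), "f2": unit(270)}

# Case B (no superposition): two orthogonal features, not aligned to neurons.
rotated = {"g0": unit(45), "g1": unit(135)}

def neurons_responding(features, neuron_index, threshold=0.1):
    """Names of features that drive the given neuron strongly positive."""
    return sorted(
        name for name, direction in features.items()
        if direction[neuron_index] > threshold
    )

poly_a = neurons_responding(superposed, 1)  # neuron 1 fires on f0 and f1
poly_b = neurons_responding(rotated, 1)     # neuron 1 fires on g0 and g1
```

In both cases neuron 1 fires on two different features, so it looks polysemantic, but only Case A has more features than neurons. Case B corresponds to the "non-neuron aligned orthogonal features" scenario listed above.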

Epistemic status: I wrote this “quickly” in about 12 hours, as otherwise it wouldn’t have come out at all. Think of...

Thanks!

I was grouping that with “the computation may require mixing together ‘natural’ concepts” in my head. After all, entropy isn’t an observable in the environment, it’s something you derive to better model the environment. But I agree that “the concept may not be one you understand” seems more central.

In 2021, I proposed measuring progress in the perplexity of language models and extrapolating past results to determine when language models were expected to reach roughly "human-level" performance. Here, I build on that approach by introducing a more systematic and precise method of forecasting progress in language modeling that employs scaling laws to make predictions.

The full report for this forecasting method can be found in this document. In this blog post I'll try to explain all the essential elements of the approach without providing excessive detail regarding the technical derivations.

This approach can be contrasted with Ajeya Cotra's Bio Anchors model, providing a new method for forecasting the arrival of transformative AI (TAI). I will tentatively call it the "Direct Approach", since it makes use of scaling laws...

I'm confused about how heterogeneity in data quality interacts with scaling. Surely training a LM on scientific papers would give different results from training it on web spam, but data quality is not an input to the scaling law... This makes me wonder whether your proposed forecasting method might have some kind of blind spot in this regard, for example failing to take into account that AI labs have probably already fed all the scientific papers they can into their training processes. If future LMs train on additional data that have little to do with science, could that keep reducing overall cross-entropy loss (as scientific papers become a smaller fraction of the overall corpus) but fail to increase scientific ability?

Over the last couple of years, mechanistic interpretability has seen substantial progress. Part of this progress has been enabled by the identification of superposition as a key barrier to understanding neural networks (Elhage et al., 2022) and the identification of sparse autoencoders as a solution to superposition (Sharkey et al., 2022; Cunningham et al., 2023; Bricken et al., 2023).

From our current vantage point, I think there’s a relatively clear roadmap toward a world where mechanistic interpretability is useful for safety. This post outlines my views on what progress in mechanistic interpretability looks like and what I think is achievable by the field in the next 2+ years. It represents a rough outline of what I plan to work on in the near future.

My thinking and work is, of course,...

We propose a simple fix: Use instead of , which seems to be a Pareto improvement over (at least in some real models, though results might be mixed) in terms of the number of features required to achieve a given reconstruction error.

When I was discussing better sparsity penalties with Lawrence, and the fact that I observed some instability in in toy models of superposition, he pointed out that the gradient of norm explodes near zero, meaning that features with "small errors" that cause them to h...

Authors: Senthooran Rajamanoharan*, Arthur Conmy*, Lewis Smith, Tom Lieberum, Vikrant Varma, János Kramár, Rohin Shah, Neel Nanda

A new paper from the Google DeepMind mech interp team: Improving Dictionary Learning with Gated Sparse Autoencoders!

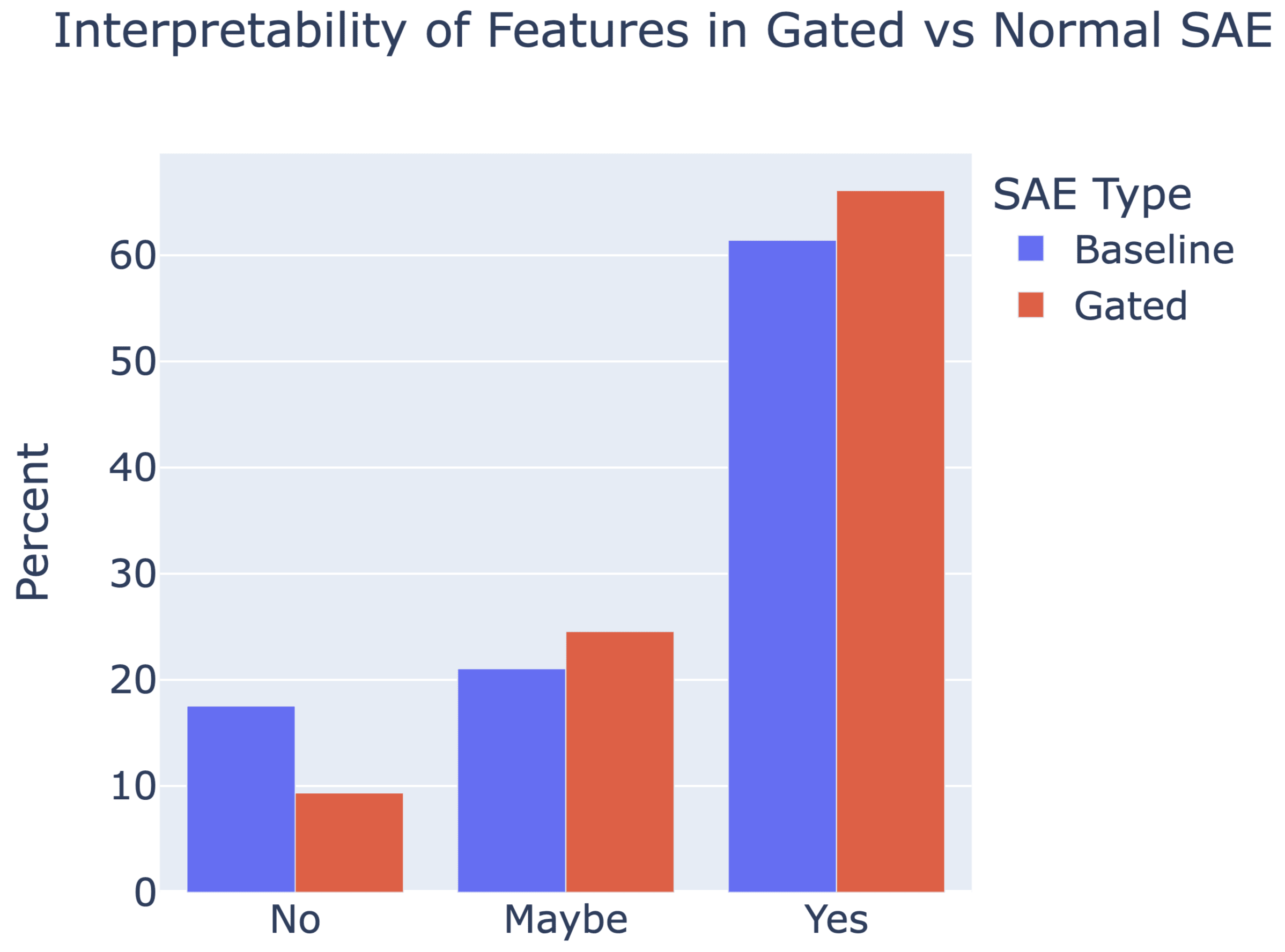

Gated SAEs are a new Sparse Autoencoder architecture that seems to be a significant Pareto-improvement over normal SAEs, verified on models up to Gemma 7B. They are now our team's preferred way to train sparse autoencoders, and we'd love to see them adopted by the community! (Or to be convinced that it would be a bad idea for them to be adopted by the community!)

They achieve similar reconstruction with about half as many firing features, while being comparably or more interpretable (confidence interval for the increase is 0%-13%).

See Sen's Twitter summary, my Twitter summary, and the paper!

We use learning rate 0.0003 for all Gated SAE experiments, and also the GELU-1L baseline experiment. We swept for optimal baseline learning rates on GELU-1L for the baseline SAE to generate this value.

For the Pythia-2.8B and Gemma-7B baseline SAE experiments, we divided the L2 loss by , motivated by wanting better hyperparameter transfer, and so changed learning rate to 0.001 or 0.00075 for all the runs (currently in Figure 1, only attention output pre-linear uses 0.00075. In the rerelease we'll state all the values used). We didn't see n...

At some point in the future, AI developers will need to ensure that when they train sufficiently capable models, the weights of these models do not leave the developer’s control. Ensuring that weights are not exfiltrated seems crucial for preventing threat models related to both misalignment and misuse. The challenge of defending model weights has previously been discussed in a RAND report.

In this post, I’ll discuss a point related to preventing weight exfiltration that I think is important and under-discussed: unlike most other cases where a defender wants to secure data (e.g. emails of dissidents or source code), model weights are very large files. At the most extreme, it might be possible to set a limit on the total amount of data uploaded from your inference servers so that...

If anyone wants to work on this or knows people who might, I'd be interested in funding work on this (or helping secure funding - I expect that to be pretty easy to do).

I looked at the paper again and couldn't find anywhere where you do the type of weight-editing this post describes (extracting a representation and then changing the weights without optimization such that they cannot write to that direction).

The LoRRA approach mentioned in RepE finetunes the model to change representations which is different.
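For concreteness, the kind of optimization-free weight edit discussed above can be sketched as projecting a direction out of a matrix's output space, W' = W - r̂ r̂ᵀ W. This is a minimal illustration in plain Python; the matrix, the direction, and the function name `orthogonalize_weights` are hypothetical, and the convention that rows of W index output (residual stream) dimensions is an assumption.

```python
import math

def orthogonalize_weights(W, direction):
    """Return W' = W - r_hat r_hat^T W, so that W' cannot write along `direction`.

    W is a list of rows; rows index output dimensions, columns index inputs.
    """
    norm = math.sqrt(sum(a * a for a in direction))
    r_hat = [a / norm for a in direction]
    n_rows, n_cols = len(W), len(W[0])
    # proj[j] is the component of column j of W along r_hat.
    proj = [sum(r_hat[i] * W[i][j] for i in range(n_rows)) for j in range(n_cols)]
    return [
        [W[i][j] - r_hat[i] * proj[j] for j in range(n_cols)]
        for i in range(n_rows)
    ]

def matvec(W, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

# Hypothetical 3x2 output matrix and direction (assumptions for illustration).
W = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]
direction = [1.0, 2.0, 2.0]

W_edited = orthogonalize_weights(W, direction)
output = matvec(W_edited, [0.7, -1.3])  # has no component along `direction`
```

No gradient step is involved: the edit is a closed-form projection, which is what separates it from finetuning-based approaches.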